Quality Estimation: A Smart Filter for Cost-Effective Translation Workflows

17/09/2024

Learn how Quality Estimation and Large Language Models can make translation workflows more cost-effective and deliver high-quality results faster, and discover how to optimize your translation process.

Author

Amir Kamran joined TAUS as Senior Data Engineer in September 2017 and now works as a Solution Architect. His primary duties include collaborating with the engineering and machine learning teams to develop new solutions and NLP tools, and to identify trends that can improve TAUS' offerings. He also supports the sales team by designing and implementing proofs of concept for TAUS products and services. Amir holds a master's degree in Language and Communication Technologies, which he completed as an Erasmus Mundus scholar at Charles University in Prague and the University of Malta. He previously worked as a Research Developer on machine translation projects at the University of Amsterdam and Charles University in Prague.

Related Articles

by David Koot

by David Koot09/01/2026

TAUS EPIC API's customizable Quality Estimation models can enhance translation workflows and meet specific needs without requiring in-house NLP expertise.

05/12/2025

Explore how TAUS EPIC API's Quality Estimation can revolutionize translation workflows, that offer scalable, domain-specific solutions for Language Service Providers without the need for in-house NLP experts.

30/10/2025

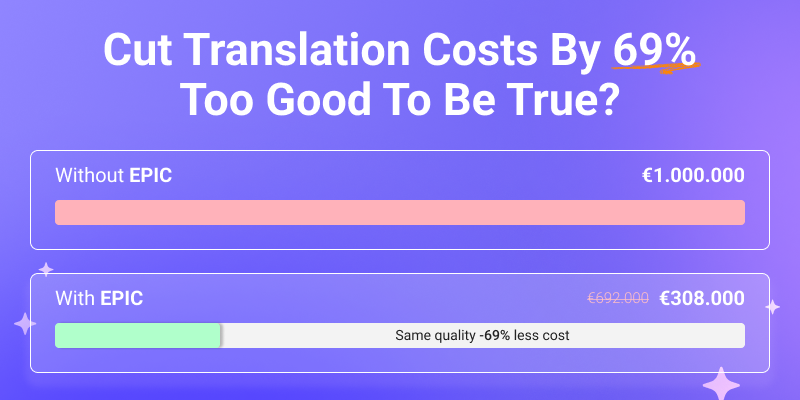

See your translation ROI with Quality Estimation (QE) and Automatic Post-Editing (APE). Find out how EPIC can reduce post-editing costs by up to 70% while improving efficiency.